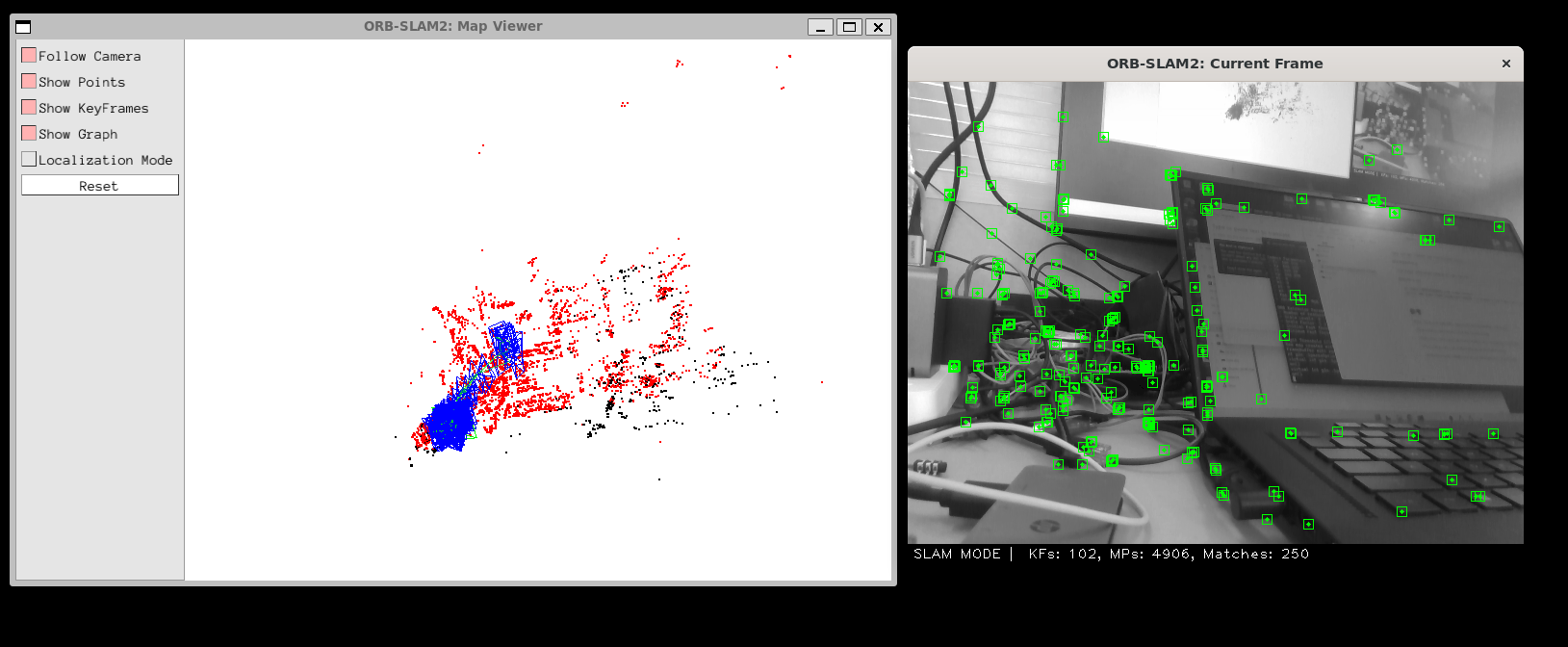

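Connecting a RealSense D435 on WSL2 Ubuntu 20.04 and running the OpenCV 4.2 version of ORB SLAM2 to build a point cloud

Most of the steps are covered by the first two links under References; this post only records the pitfalls I hit along the way.

WSL environment setup

Install Ubuntu 20.04. While setting up the key during the ROS installation, you may run into the following error: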

chen@CHEN-ROG:~$ sudo apt-key adv --keyserver 'hkp://keyserver.ubuntu.com:80' --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654

Executing: /tmp/apt-key-gpghome.icMaoQqJHi/gpg.1.sh --keyserver hkp://keyserver.ubuntu.com:80 --recv-key C1CF6E31E6BADE8868B172B4F42ED6FBAB17C654

gpg: connecting dirmngr at '/run/user/0/gnupg/d.fq4e4j8nmokbdb8y9hp9q8ya/S.dirmngr' failed: IPC connect call failed

gpg: keyserver receive failed: No dirmngr

Running sudo apt-get install dirmngr did not fix the problem; the key was set up successfully after switching to the following command:

curl -sL "http://keyserver.ubuntu.com/pks/lookup?search=0xC1CF6E31E6BADE8868B172B4F42ED6FBAB17C654&op=get" | sudo apt-key add -

Pangolin

In my testing, Pangolin 0.6 compiled and installed successfully; the source code can be downloaded from GitHub (https://github.com/stevenlovegrove/Pangolin/releases/tag/v0.6).

sudo apt-get install libxkbcommon-dev

sudo apt-get install wayland-protocols

sudo apt install libglew-dev

cd Pangolin

mkdir build

cd build

cmake ..

make -j

sudo make install

Eigen

In my testing, Eigen 3.3.7 works correctly.
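To confirm which Eigen version is actually installed, the version macros in Eigen's Macros.h header can be read directly. The sketch below is only an illustration: the helper name eigen_version is made up here, and the header path in the usage comment assumes an apt install (a source install usually ends up under /usr/local/include/eigen3 instead).

```shell
# Print the Eigen version recorded in a Macros.h header as "world.major.minor".
# eigen_version is a hypothetical helper name used only for this sketch.
eigen_version() {
    awk '
        /^#define EIGEN_WORLD_VERSION/ { w = $3 }
        /^#define EIGEN_MAJOR_VERSION/ { M = $3 }
        /^#define EIGEN_MINOR_VERSION/ { m = $3 }
        END {
            if (w != "" && M != "" && m != "") print w "." M "." m
            else exit 1   # macros not found: wrong file or unexpected layout
        }
    ' "$1"
}

# Usage (path is an assumption, adjust to your install):
#   eigen_version /usr/include/eigen3/Eigen/src/Core/util/Macros.h
```

If the printed version differs from 3.3.7, the build may still work, but that combination is not something this post tested.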

OpenCV

Ubuntu 20.04 already has OpenCV 4.2 installed on the system, so there is no need to install it again.
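A quick way to double-check the installed version is to compare what pkg-config reports against the expected 4.2 prefix. This is a minimal sketch: version_matches is a hypothetical helper, and the usage line assumes pkg-config is installed and that the OpenCV development package registers itself under the module name opencv4.

```shell
# Succeed if version string $1 equals $2 or starts with "$2." (e.g. 4.2.0 vs 4.2).
# version_matches is a hypothetical helper name used only for this sketch.
version_matches() {
    case "$1" in
        "$2"|"$2".*) return 0 ;;
        *)           return 1 ;;
    esac
}

# Usage (assumes pkg-config and the opencv4 module are available):
#   version_matches "$(pkg-config --modversion opencv4)" 4.2 && echo "OpenCV 4.2 found"
```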

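USB connection

Install the USBIPD-WIN project (https://github.com/dorssel/usbipd-win/releases).

Open PowerShell as administrator and run the following command to print each device's bus ID:

usbipd list

Run the following command to share the device (4-4 is the bus ID on my machine; use the one shown in your own list):

usbipd bind --busid 4-4

Run the following command to attach the device to WSL:

usbipd attach --wsl --busid 4-4

Run the following command to detach it:

usbipd detach --busid 4-4

Inside Ubuntu, the connection status can be checked with the lsusb command.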
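The lsusb check can be scripted so that later steps only run once the camera is visible inside WSL. The sketch below looks for Intel's USB vendor ID (8086) in the lsusb output; has_intel_usb_device is a hypothetical helper name, and matching on the vendor ID alone is an assumption that would also match any other Intel USB device that happens to be attached.

```shell
# Read lsusb output on stdin; succeed if any device carries Intel's vendor ID 8086.
# has_intel_usb_device is a hypothetical helper name used only for this sketch.
has_intel_usb_device() {
    grep -qiE 'ID 8086:[0-9a-f]{4}'
}

# Usage inside Ubuntu:
#   lsusb | has_intel_usb_device && echo "RealSense (or another Intel device) attached"
```

If the check fails right after usbipd attach, re-running usbipd list on the Windows side shows whether the device is still shared and attached.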

ORB SLAM2

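The OpenCV 4.2 version can be downloaded from GitHub (https://github.com/dolf321/ORB_SLAM2_OPENCV4). The code in this repository fixes many problems in the official code, and most files already have #include <unistd.h> added, but it is still worth checking when running build_ros.sh.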
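One way to do that check is to list the source files that still lack the include. The sketch below assumes the reason unistd.h is needed is the use of usleep, which is not stated in this post, so treat the search pattern as an assumption; find_missing_unistd is a hypothetical helper name and the path in the usage line is illustrative.

```shell
# List C++ sources under directory $1 that call usleep but never include unistd.h.
# find_missing_unistd is a hypothetical helper name used only for this sketch.
find_missing_unistd() {
    grep -rl --include='*.cc' --include='*.cpp' --include='*.h' 'usleep' "$1" \
        | while IFS= read -r f; do
              # Print the file only when the include line is absent.
              grep -q '#include *<unistd.h>' "$f" || printf '%s\n' "$f"
          done
}

# Usage (path is illustrative):
#   find_missing_unistd ~/ORB_SLAM2_OPENCV4
```

Any file it prints is a candidate for adding #include <unistd.h> near the top before rebuilding.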

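Compile error: ‘slots_reference’ was not declared in this scope

This error may appear when running build.sh. The fix is to run the following in a terminal, which replaces every ++11 with ++14 in CMakeLists.txt, i.e. switches the build from C++11 to C++14:

sed -i 's/++11/++14/g' CMakeLists.txt

Topic changes

The RGB and depth image topics published by realsense2_camera differ from the ones the ORB SLAM code subscribes to, so the source needs to be edited. The location is roughly ORB_SLAM2_OPENCV4/Examples/ROS/ORB_SLAM2/src/ros_rgbd.cc; change lines 68 and 69 to the following, and remember to recompile afterwards:

message_filters::Subscriber<sensor_msgs::Image> rgb_sub(nh, "/camera/color/image_raw", 1);

message_filters::Subscriber<sensor_msgs::Image> depth_sub(nh, "/camera/aligned_depth_to_color/image_raw", 1);

The same result can be achieved by changing the image topics in rs_rgbd.launch instead; see the GitHub link under References for details.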
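Whichever route is taken, it helps to confirm that the two topics named above are actually being published once the camera node is up. The following is a minimal sketch that filters the output of rostopic list; missing_topics is a hypothetical helper name, and it only checks that the names exist, not that images are flowing.

```shell
# Read a topic list (one name per line) on stdin and print each required topic
# that is absent. Prints nothing when both topics are present.
# missing_topics is a hypothetical helper name used only for this sketch.
missing_topics() {
    topics=$(cat)
    for t in /camera/color/image_raw /camera/aligned_depth_to_color/image_raw; do
        printf '%s\n' "$topics" | grep -qxF "$t" || printf '%s\n' "$t"
    done
}

# Usage (with the camera node running):
#   rostopic list | missing_topics
```

If the aligned depth topic is reported missing, the camera was probably started with a launch file or arguments that do not enable depth alignment.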

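Camera intrinsics

Open two terminals and run the following commands, one in each:

roslaunch realsense2_camera rs_camera.launch

rostopic echo /camera/color/camera_info

Create a RealSense.yaml file in Examples/RGB-D, then edit the following content to match your intrinsics and save it: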

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 606.2763671875

Camera.fy: 605.233154296875

Camera.cx: 319.2896728515625

Camera.cy: 246.07589721679688

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.k3: 0.0

Camera.width: 640

Camera.height: 480

#Camera frames per second

Camera.fps: 30.0

#IR projector baseline times fx (aprox.)

Camera.bf: 30.3

#Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

#Close/Far threshold. Baseline times.

ThDepth: 40.0

#Depthmap values factor: converts depth pixel values to real distances (the raw unit is mm, converted to m)

DepthMapFactor: 1000.0

#ORB Parameters

#--------------------------------------------------------------------------------------------

#ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

#ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

#ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

#ORB Extractor: Fast threshold

#Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

#Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

#You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

#Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize: 2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

Running
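Before launching anything, the intrinsics in RealSense.yaml can be cross-checked against the camera_info output. In a CameraInfo message the K array is row-major, [fx, 0, cx, 0, fy, cy, 0, 0, 1], so the four Camera.* values can be read straight off it. The sketch below also recomputes Camera.bf as baseline times fx; the 0.05 m default baseline is an assumption about the D435 and is not read from the camera, and camera_yaml_from_info and BASELINE_M are hypothetical names.

```shell
# Read `rostopic echo` output of a CameraInfo message on stdin and print the
# matching ORB SLAM2 settings lines.
# camera_yaml_from_info and BASELINE_M are hypothetical names for this sketch;
# BASELINE_M is an assumed stereo baseline in metres (default 0.05).
camera_yaml_from_info() {
    awk -v b="${BASELINE_M:-0.05}" '
        /^K:/ {
            # Strip brackets and commas so the nine entries become fields 2..10.
            gsub(/\[/, " "); gsub(/\]/, " "); gsub(/,/, " ")
            printf "Camera.fx: %s\nCamera.fy: %s\n", $2, $6
            printf "Camera.cx: %s\nCamera.cy: %s\n", $4, $7
            printf "Camera.bf: %.1f\n", b * $2
        }'
}

# Usage (with the camera node running):
#   rostopic echo -n 1 /camera/color/camera_info | camera_yaml_from_info
```

With the K values used in this post and the assumed 0.05 m baseline, the computed Camera.bf comes out at 30.3, which agrees with the value in the file above.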

Add the following environment variable:

export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/(replace with your path)/ORB_SLAM2_OPENCV4/Examples/ROS

Install a dependency package:

sudo apt-get install ros-noetic-rgbd-launch

Start the depth camera node:

roslaunch realsense2_camera rs_rgbd.launch

Start ORB SLAM:

cd /home/(replace with your path)/ORB_SLAM2_OPENCV4

rosrun ORB_SLAM2 RGBD Vocabulary/ORBvoc.txt Examples/RGB-D/RealSense.yaml

References

https://blog.csdn.net/2301_80007161/article/details/137022723

https://github.com/ppaa1135/ORB_SLAM2-D435

https://blog.csdn.net/chengyanshan0701/article/details/101037947

Nice work

李昊阳 2024-07-09